By Michael McDonald, Director of Marketing

Image processing and vision-based sensors are fast becoming critical components of driver safety features. Reading traffic signals, street signs, and lane-marking colors accurately is essential to a vehicle’s ability to process information for safety features like automated braking or lane assist. But before we dive into emerging image processing technology, let’s look at how color filter arrays began.

Back to Basics – The Bayer Filter

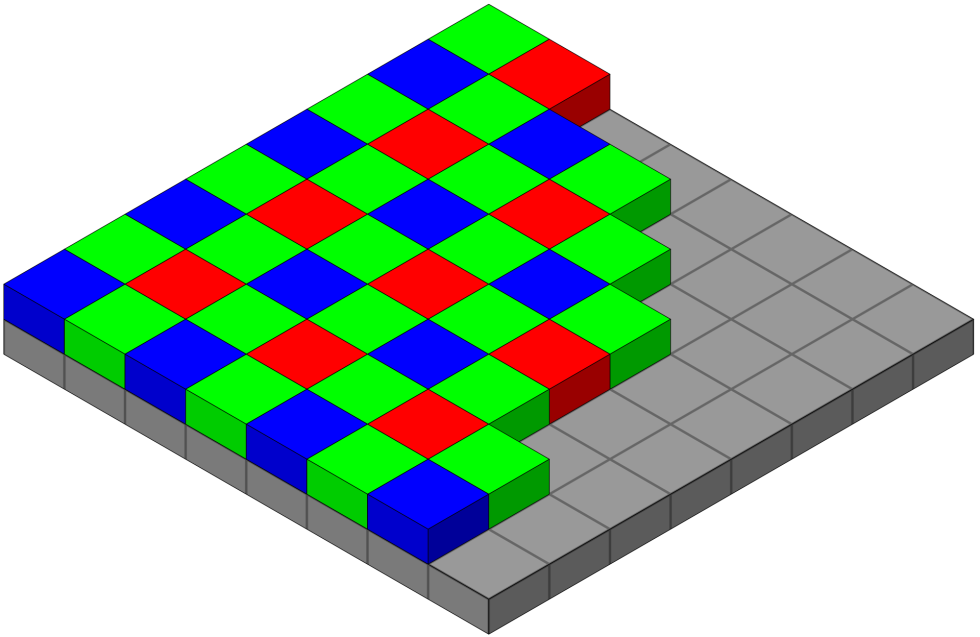

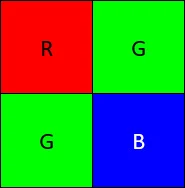

In 1976, Bryce Bayer of Eastman Kodak patented (US Patent 3,971,065) an arrangement of color filters on an image sensor that combines one red (R) and one blue (B) filter with two green (G) filters (see Figure 1). These filters, placed over the cells (or pixels) of the image sensor, limit the wavelengths of light that reach each sensor cell. His eponymous invention, now known as the Bayer filter, was designed to match the human visual system, which discerns luminance (brightness) detail better than chromatic detail.

Bayer treated green as the luminance element. The green pixels are more sensitive and therefore collect more signal. Using twice as many green pixels in the array improves low-light performance and provides more edge information (detail) from a scene due to the increase in spatial sampling.

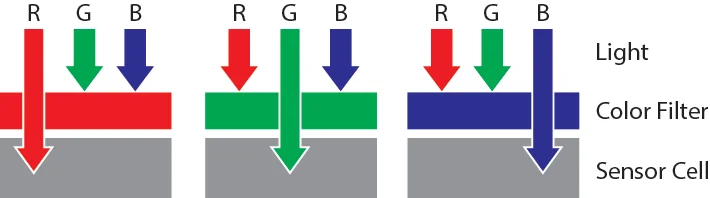

To create the remaining colors, Bayer added two “chrominance elements”: a red filter and a blue filter that allow the red and blue wavelengths to pass through to the sensor cell (Figure 2).
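To make the layout concrete, here is a minimal sketch of how the 2x2 Bayer tile repeats across a sensor. The names `BAYER_TILE`, `filter_at`, and `mosaic` are illustrative choices for this example, not part of any library.

```python
from collections import Counter

# One 2x2 Bayer tile: one red, two green, one blue (RGGB ordering assumed).
BAYER_TILE = (("R", "G"),
              ("G", "B"))

def filter_at(row, col, tile=BAYER_TILE):
    """Return the filter letter covering the pixel at (row, col)."""
    return tile[row % 2][col % 2]

def mosaic(height, width, tile=BAYER_TILE):
    """Build the filter layout as a list of strings, one string per row."""
    return ["".join(filter_at(r, c, tile) for c in range(width))
            for r in range(height)]

layout = mosaic(4, 4)
counts = Counter("".join(layout))

for line in layout:
    print(line)
print(dict(counts))  # half of all pixels are green
```

Swapping the letters in the tile is enough to describe other 2x2 arrangements with the same helper.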

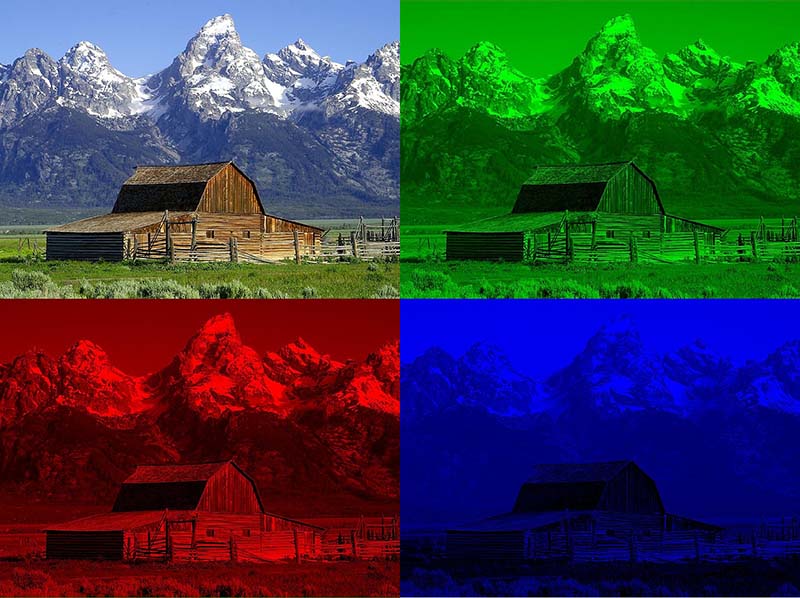

Each cell in the RGGB array produces a value that is proportional to the strength of the light in the wavelength band its filter passes. By combining red, green, and blue based on their strength, every color is accurately reproduced (Figure 3). And by using the information in the green cells, the luminance of the image is also accurately reproduced.

Machine learning algorithms identify objects mainly through the image detail provided by the luminance information alone; chrominance information is not needed for many detection algorithms. For example, accurately identifying that a car is present is far more important than identifying the color of the car when deciding whether to brake.

Furthermore, in automotive applications, the ability to operate in low-luminance (low-light) conditions is critical; the camera sensors in the vehicle need to operate at night for the car to “see”. The problem is that image sensor cells are inaccurate in the lowest light conditions; various types of noise (thermal, electronic circuit, “shot noise”) contribute as much to the strength of the signal as the actual light (Figure 4).

One of the biggest challenges for both human and machine vision in the automotive environment is low light. Enter the “clear” pixel, in which the two green color filters are replaced by clear filters that let all light in, thereby maximizing the luminance information. This is good for humans, machine learning algorithms, and image sensors.
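A rough way to see why more light helps is a simple signal-to-noise model: shot noise grows as the square root of the collected signal, and read noise from the electronics adds in quadrature. The sketch below uses that model only; it leaves out thermal noise, and the electron counts, the read noise value, and the assumption that a green filter passes about one third of the light a clear filter does are illustrative numbers, not measurements.

```python
import math

def snr(signal_e, read_noise_e=3.0):
    """Signal-to-noise ratio for a pixel collecting `signal_e` electrons.

    Shot noise is sqrt(signal); read noise is added in quadrature.
    """
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2)

# Assumed values for a dim scene (illustrative only).
CLEAR_ELECTRONS = 30.0        # collected under a clear filter
GREEN_FRACTION = 1.0 / 3.0    # share of that light passed by a green filter

snr_clear = snr(CLEAR_ELECTRONS)
snr_green = snr(CLEAR_ELECTRONS * GREEN_FRACTION)

print(f"clear pixel SNR: {snr_clear:.2f}")
print(f"green pixel SNR: {snr_green:.2f}")
```

Under these assumptions the clear pixel ends up with roughly twice the signal-to-noise ratio of the green pixel, and the gap widens as the scene gets darker and read noise dominates.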

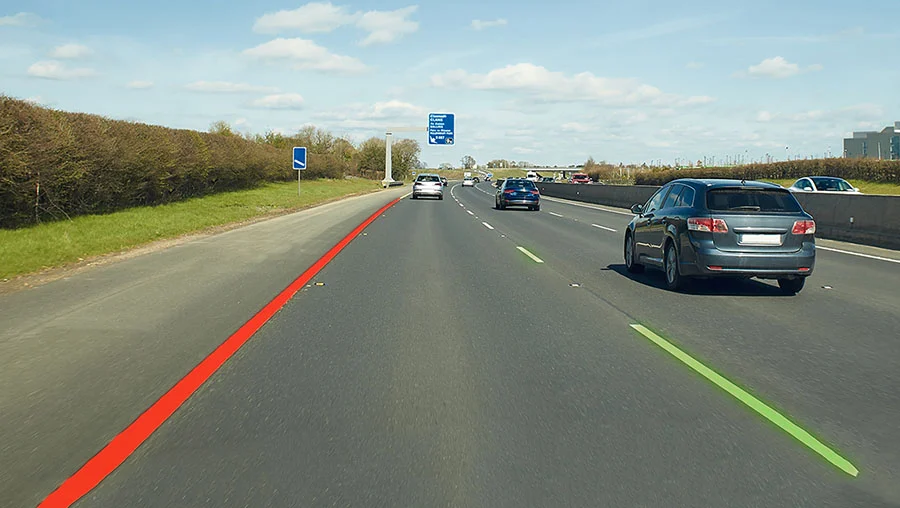

But there is also a downside to the clear pixel, in that it is more difficult to differentiate colors since all colors get through to the sensor with a clear pixel. Accurately discerning color is important to driving and to the smart vehicles that are assisting drivers: red brake lights or a red stop light tells a driver (or the vehicle) to stop; a green signal tells the driver it may proceed; a yellow stripe between road lanes indicates traffic is coming in the other direction in the adjacent lane, while a white stripe indicates it is going in the same direction. Confusing a flashing red and flashing yellow light can be the difference between a car coming to a complete stop or proceeding; and confusing red and orange can cause problems in a construction zone where one means stop and the other usually means slow down. Color is critical to driving, especially the color red!

Understanding Color Filter Arrays

Various color filter arrays (CFAs) are being explored that maximize luminance using clear pixels, coupled with other color filters that convey critical automotive-relevant colors. Below, we review the pros and cons of various color filter arrays.

RGGB = Red-green-green-blue. This CFA provides excellent color reproduction across the visible spectrum. However, in low-light situations, the filters reduce the signal strength (versus a “clear” filter) and the signal-to-noise ratio is reduced.

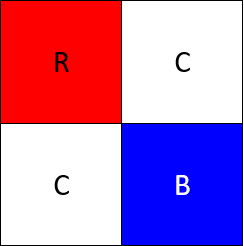

RCCB = Red-clear-clear-blue. “Clear” includes the green wavelengths so RCCB is very similar to RGGB: it provides improved luminance information as well as good color information with the red and blue pixels.

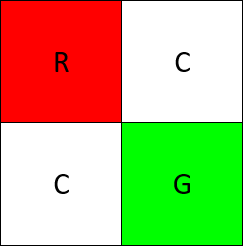

RCCG = Red-clear-clear-green. This CFA is good for differentiating red and green traffic lights. The two clear pixels provide improved luminance information; the color pixels are red and green in this case. The green pixel is more sensitive than the blue pixel used in the Bayer filter and RCCB cases, which improves low-light performance, but removing the blue color filter makes color fidelity more difficult.

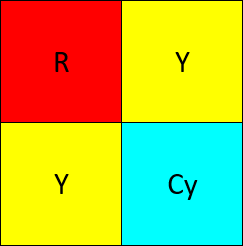

RYYCy = Red-yellow-yellow-cyan (cyan is equal parts blue and green). This CFA is good for differentiating yellow stripes from white stripes. It also contains elements of red, green, and blue (green and blue both through the cyan), so it has the components to make all colors. It lacks the two clear pixels, however, so it collects less signal than RCCG or RCCB, but more signal than the Bayer filter (RGGB).

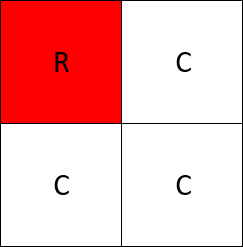

RCCC = Red-clear-clear-clear. This CFA provides more luminance information than any of the other CFA approaches, but it can only definitively identify the color red and cannot reproduce a human-viewable color image. Differentiating between yellow and white road markings is more difficult; this could cause a car to enter the wrong lane when turning. Yellow and green traffic signals might also be mistaken for each other, which is also dangerous.
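The trade-offs above can be sketched with an idealized model in which every filter passes a perfect sum of red, green, and blue bands: clear = R + G + B, yellow = R + G, and cyan = G + B. This is a simplifying assumption for illustration only; real sensors have overlapping filter responses and need calibrated color correction. The function names and the sample stripe values are hypothetical.

```python
def rccb_to_rgb(red, clear, blue):
    """RCCB: clear = R + G + B, so green is what remains after R and B."""
    green = max(clear - red - blue, 0)
    return (red, green, blue)

def ryycy_to_rgb(red, yellow, cyan):
    """RYYCy: yellow = R + G and cyan = G + B."""
    green = max(yellow - red, 0)
    blue = max(cyan - green, 0)
    return (red, green, blue)

def rccc_red_fraction(red, clear):
    """RCCC: only the share of red in the total light can be recovered."""
    return red / clear if clear else 0.0

# Hypothetical true (R, G, B) values for two lane stripes.
yellow_stripe = (300, 280, 60)
white_stripe = (300, 300, 300)

for name, (r, g, b) in (("yellow", yellow_stripe), ("white", white_stripe)):
    print(name,
          rccb_to_rgb(r, r + g + b, b),
          ryycy_to_rgb(r, r + g, g + b),
          rccc_red_fraction(r, r + g + b))
```

In this idealized model RCCB and RYYCy both recover the full color, so the yellow and white stripes are easy to tell apart by their blue values. RCCC yields a single number per stripe, and those numbers sit much closer together, which is consistent with the difficulty described for yellow versus white markings.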

Automotive companies are currently exploring these different CFAs in their efforts to improve automotive camera performance, especially in low light and driver-assist features. However, each of these CFAs has limitations. This is where indie Semiconductor’s advanced image processing solutions come into play.

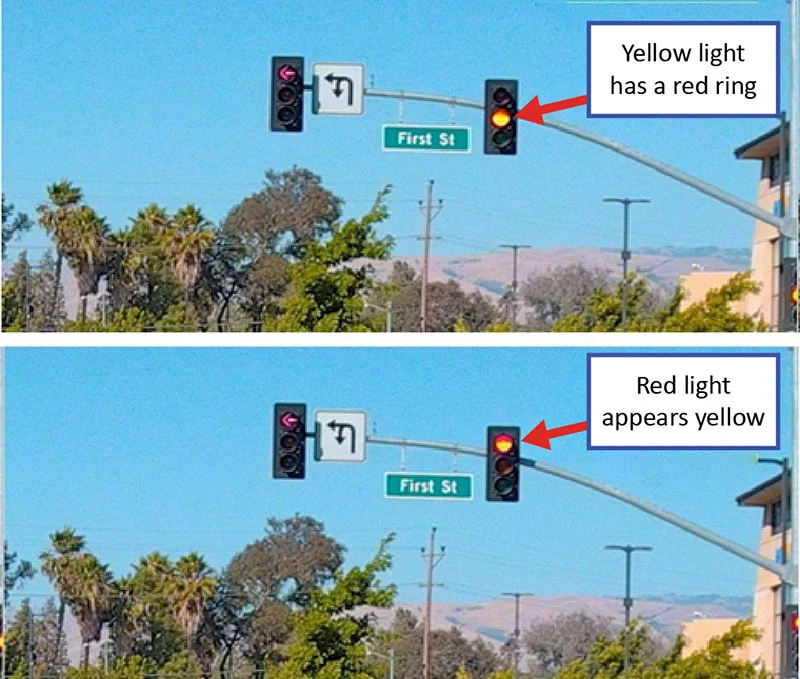

In Figure 5, the image was taken with an RCCB CFA. Note the red component in the yellow traffic light and the yellow component in the red traffic light. This mixing of red and yellow in the traffic light could cause a vehicle to misinterpret the signal and stop unexpectedly, or continue traveling when it should have stopped. Both scenarios could lead to an accident or injury.

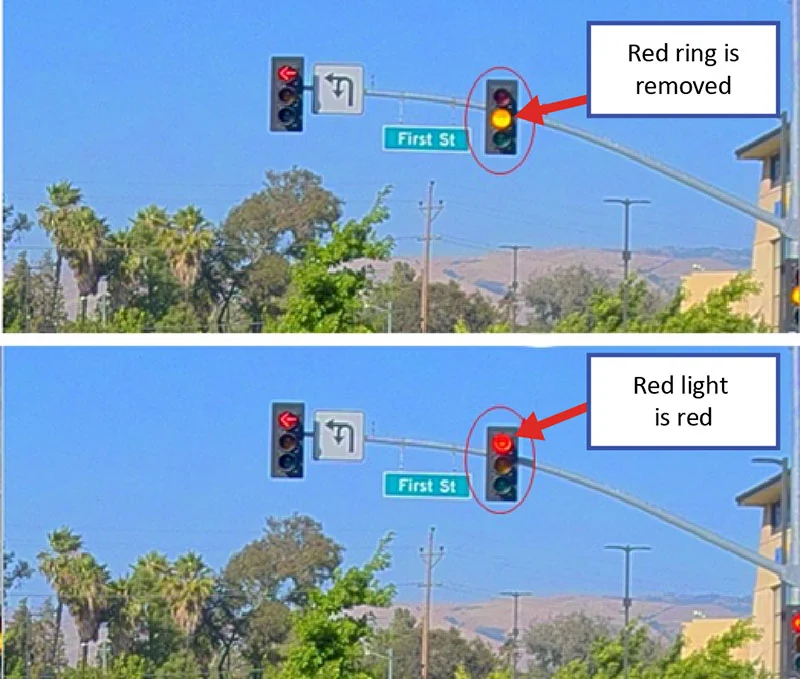

This problem is corrected through the use of indie Semiconductor’s next-generation advanced image processing. In Figure 6, indie’s proprietary image processing technology accurately identifies the red light and makes it red, and similarly makes the yellow light yellow. With improved color fidelity, the machine learning algorithms can better determine whether the vehicle should continue or stop, reducing the risk of an accident.

The ability to process these new color filter arrays, provide better low-light performance, and improve color fidelity is among the many capabilities that indie provides with its image signal processors. New vehicles are increasing both the number of camera sensors and the resolution of those sensors to see longer distances and provide more detail about the environment in and around the vehicle. Because total pixel throughput scales with the product of camera count and resolution, this dual increase multiplies image processing performance requirements, which indie addresses with vision processing products and an integrated proprietary image processing architecture. Uniquely optimized to perform image processing without additional external memory, indie image processors enable customers to lower system power, cost, and footprint, and decrease latency. The decreased latency translates to faster vehicle response and more time for more processing, both of which enable greater vehicle safety.

It is an architecture that is the cornerstone for the next generation of vehicles.
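As a back-of-the-envelope illustration of how camera count and resolution compound, the sketch below multiplies them into a pixel rate. The two configurations are hypothetical and chosen only to show the arithmetic; they do not describe any particular vehicle or product.

```python
def pixel_rate(cameras, width, height, fps):
    """Total pixels per second that the image processing must handle."""
    return cameras * width * height * fps

# Hypothetical configurations, for illustration only.
older = pixel_rate(cameras=4, width=1280, height=960, fps=30)
newer = pixel_rate(cameras=8, width=3840, height=2160, fps=30)

print(older)          # 147456000
print(newer)          # 1990656000
print(newer / older)  # 13.5
```

Doubling the camera count while moving to a sensor with 6.75 times the pixels gives 13.5 times the pixel rate at the same frame rate.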

[i] Image Credit: https://en.wikipedia.org/wiki/Bayer_filter#/media/File:Bayer_pattern_on_sensor.svg

[ii] Image Credit: https://en.wikipedia.org/wiki/RGB_color_model#/media/File:Barn_grand_tetons_rgb_separation.jpg

[iii] Image Credit: https://en.wikipedia.org/wiki/Image_noise#/media/File:Highimgnoise.jpg