by Chet Babla, SVP of Strategic Marketing

Understanding the Sensor Modalities Enabling the Uncrashable Car

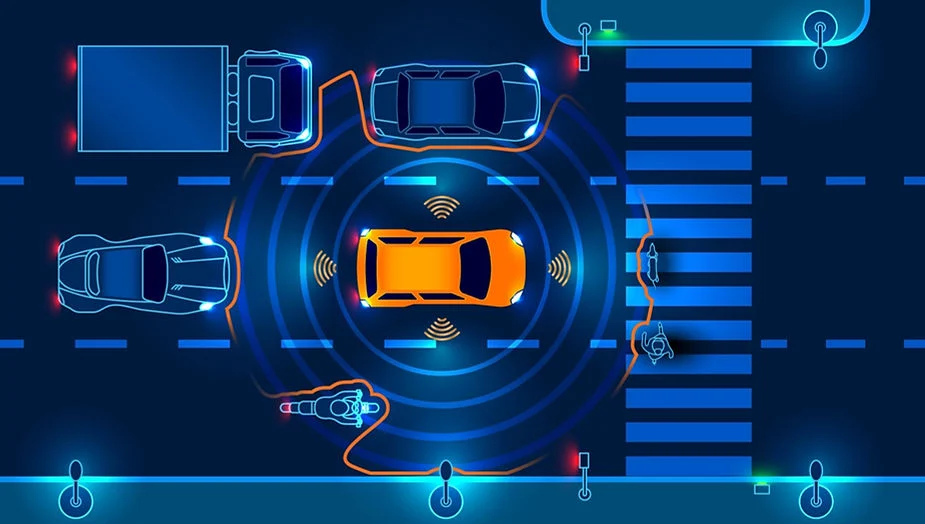

In our last blog, “In Pursuit of the Uncrashable Car,” we identified the need for various sensor modalities to gather data. This data must be processed to enable timely decision-making, which ultimately triggers automated safety responses such as braking, steering, or driver alerts.

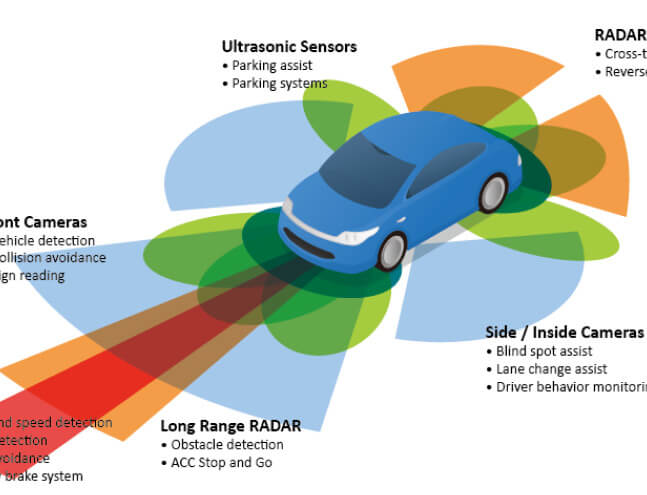

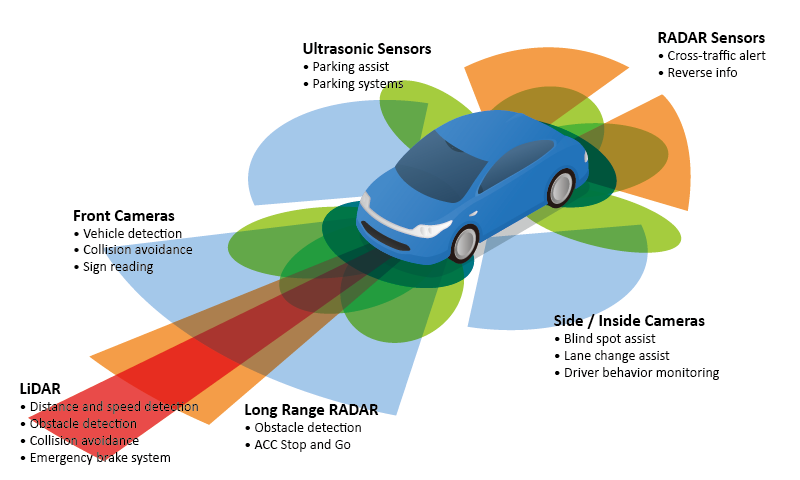

As advanced driver assistance systems (ADAS) and driving automation technologies become more sophisticated, they require an enhanced understanding of the vehicle’s environment. To achieve this, the number of sensors capturing data on each vehicle will increase dramatically. The mix of automotive sensor modalities deployed in new and emerging vehicles may include visible-spectrum and infrared cameras, short-range and long-range radar, light detection and ranging (LiDAR), and ultrasonic sensors.

Taking a Deeper Look into Sensor Modalities

So, let’s look at the key features and use cases of each sensor modality.

Ultrasonic Sensors operate above the range of human hearing, at around 40 kHz. They are now commonly deployed as practical, low-cost solutions for short-range, low-spatial-resolution sensing use cases up to a few meters, such as park-assist. A key benefit of ultrasonic sensors is their resilience in most weather conditions.
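To make the ranging principle concrete, here is a minimal Python sketch of how an ultrasonic sensor could turn an echo delay into a distance. It assumes a speed of sound of 343 m/s (dry air at about 20 °C) and an ideal single echo; real sensors also compensate for air temperature and filter multiple reflections. The function names are illustrative only.

```python
# Minimal sketch of ultrasonic echo ranging (time of flight).
# Assumptions (not from the article): speed of sound of 343 m/s in dry air
# at about 20 degrees C, and an ideal, single-echo measurement.

SPEED_OF_SOUND_M_S = 343.0  # assumed; varies with air temperature
CARRIER_HZ = 40_000.0       # ultrasonic operating frequency cited in the text


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflector; the pulse travels out and back, so halve the path."""
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def wavelength_m(freq_hz: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Acoustic wavelength, lambda = v / f."""
    return speed_m_s / freq_hz


if __name__ == "__main__":
    # An echo arriving 11.66 ms after the pulse implies an obstacle about 2 m away.
    print(f"distance: {echo_distance_m(11.66e-3):.2f} m")
    print(f"wavelength at 40 kHz: {wavelength_m(CARRIER_HZ) * 1000:.1f} mm")
```

The millisecond-scale round trip, together with the rapid attenuation of sound in air, is consistent with ultrasonic sensing being used for short ranges such as park-assist.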

Radar Sensors operate in the 20 to 80 GHz radio spectrum and have been deployed in high-end vehicles for collision warning since the late 1990s. Depending on the frequency, radar can detect objects and their relative speeds at distances from less than a meter to around 200 meters, making it a versatile, all-round sensor modality. Radar is now widely deployed for park-assist, automatic emergency braking, adaptive cruise control (ACC), lane-change assist, and blind-spot detection. As with ultrasonic sensors, radar is resilient in most weather conditions.
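Radar measures relative speed through the Doppler shift of the reflected signal. The sketch below converts between Doppler shift and radial speed, assuming a monostatic radar with a 77 GHz carrier and a target speed far below the speed of light. It deliberately ignores the chirp and FFT processing a real automotive radar performs; the function names are illustrative.

```python
# Minimal sketch: relative (radial) speed from the Doppler shift of a radar echo.
# Assumptions: monostatic radar, target speed much lower than c, 77 GHz carrier.

C_M_S = 299_792_458.0  # speed of light in vacuum, m/s


def radial_speed_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """v = f_d * c / (2 * f_c); a positive shift means the target is approaching."""
    return doppler_shift_hz * C_M_S / (2.0 * carrier_hz)


def doppler_shift_hz(radial_speed: float, carrier_hz: float) -> float:
    """Inverse relation: f_d = 2 * v * f_c / c."""
    return 2.0 * radial_speed * carrier_hz / C_M_S


if __name__ == "__main__":
    f_c = 77e9
    closing = 30.0 / 3.6  # a 30 km/h closing speed, converted to m/s
    f_d = doppler_shift_hz(closing, f_c)
    print(f"Doppler shift: {f_d / 1000:.2f} kHz")
    print(f"recovered speed: {radial_speed_m_s(f_d, f_c) * 3.6:.1f} km/h")
```

Because the shift scales with the carrier frequency, a 77 GHz radar sees a larger Doppler shift for the same speed than a 24 GHz one, which helps it resolve small speed differences.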

LiDAR Sensors operate in the 900 to 1550 nm near-infrared spectrum and offer similar capabilities to radar for ADAS use cases. The key difference is wavelength: at 1550 nm, LiDAR’s wavelength is roughly 2,500 times shorter than radar’s wavelength of about 4 mm at 77 GHz, which enables much finer spatial resolution. This makes LiDAR ideal for creating very accurate 3D depictions of a vehicle’s surroundings. The two key LiDAR approaches for automotive are incoherent systems (typically time of flight, or ToF) and coherent systems (typically frequency-modulated continuous wave, or FMCW), each with different trade-offs. indie believes that coherent LiDAR systems offer significant advantages over incoherent systems in range, velocity, and reflectivity measurement, and are also more resilient to interference from other light sources.
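The radar-versus-LiDAR wavelength comparison, and the basic ranging relations behind the ToF and FMCW approaches, can be checked with a few lines of Python. This is a simplified sketch that assumes free-space propagation and ideal measurements; the chirp bandwidth, chirp duration, and beat frequency are hypothetical values, not parameters of any indie product.

```python
# Minimal sketch comparing radar and LiDAR wavelengths, plus the basic range
# relations for the two LiDAR approaches (ToF and FMCW).
# Assumptions: free-space propagation, ideal measurements, and hypothetical
# chirp parameters chosen only for illustration.

C_M_S = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_m(freq_hz: float) -> float:
    """Electromagnetic wavelength, lambda = c / f."""
    return C_M_S / freq_hz


def tof_range_m(round_trip_s: float) -> float:
    """Incoherent (ToF) LiDAR: range from the round-trip time of a light pulse."""
    return C_M_S * round_trip_s / 2.0


def fmcw_range_m(beat_hz: float, chirp_bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Coherent (FMCW) LiDAR: range from the beat frequency of a linear chirp.

    R = c * f_beat / (2 * S), where S = B / T is the chirp slope in Hz/s.
    """
    slope = chirp_bandwidth_hz / chirp_duration_s
    return C_M_S * beat_hz / (2.0 * slope)


def fmcw_radial_speed_m_s(doppler_hz: float, wavelength: float) -> float:
    """Coherent detection also measures the Doppler shift directly: v = f_d * lambda / 2."""
    return doppler_hz * wavelength / 2.0


if __name__ == "__main__":
    radar_lambda = wavelength_m(77e9)  # about 3.9 mm
    lidar_lambda = 1550e-9             # 1550 nm, given directly as a wavelength
    print(f"radar wavelength: {radar_lambda * 1e3:.2f} mm")
    print(f"ratio radar/LiDAR: {radar_lambda / lidar_lambda:.0f}x")

    # A target about 150 m away returns a pulse after roughly 1 microsecond.
    print(f"ToF range: {tof_range_m(1.0007e-6):.1f} m")

    # Hypothetical chirp: 1 GHz bandwidth over 10 us, i.e. a slope of 1e14 Hz/s.
    print(f"FMCW range: {fmcw_range_m(100e6, 1e9, 10e-6):.1f} m")
    print(f"FMCW speed: {fmcw_radial_speed_m_s(12.9e6, lidar_lambda):.2f} m/s")
```

The last line hints at why coherent systems are attractive for velocity measurement: a single FMCW return carries both a range-dependent beat frequency and a Doppler shift, whereas a basic ToF system must infer speed by comparing successive range measurements.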

Vision Sensing operates in the visible light spectrum (like human sight) or the infrared spectrum and offers the highest spatial resolution of these modalities. When this superior resolution is combined with color, contrast, and character recognition capabilities, vision-based sensing provides a level of scene interpretation, object classification, and driver visualization that other sensing modalities cannot achieve. Although the first vehicle camera was deployed in the 1950s for reversing awareness, vision-based systems enhanced by perception algorithms are now being used for multiple ADAS use cases. These include surround view, park-assist, driver and occupant monitoring, digital mirrors, automatic emergency braking (AEB), lane centering, and ACC.

Building Architectures for Multiple Sensor Modalities

Because no single sensing modality can meet all the requirements of ADAS applications, the industry is moving toward greater use of sensor fusion (the ability to combine and process inputs from multiple sensors, including radars, LiDARs, and cameras) for vehicles with higher levels of automation. The challenge for vehicle designers is that as the number of modalities increases and the resolution of each sensor type continues to improve, the volume of data that must be reliably processed in real time is growing rapidly. As a result, the computational power required to perform that processing is increasing correspondingly. Moreover, this must be accomplished while keeping overall system power consumption and bill of materials (BoM) as low as possible.
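Sensor fusion spans many techniques, from simple weighting schemes to Kalman filters and learned models. As one minimal illustration, not a description of any particular production system, the sketch below fuses independent range estimates of the same object using inverse-variance weighting, which is closely related to a Kalman filter measurement update. The sensor readings and variances are hypothetical.

```python
# Minimal sketch of one simple fusion technique: inverse-variance weighting of
# independent, unbiased measurements of the same quantity (here, range to one
# object). All readings and variances below are hypothetical placeholders.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Measurement:
    sensor: str
    value_m: float      # measured range in meters
    variance_m2: float  # measurement variance (sigma^2) in square meters


def fuse(measurements: list[Measurement]) -> tuple[float, float]:
    """Return (fused estimate, fused variance).

    Each measurement is weighted by 1 / variance, so more precise sensors count
    more, and the fused variance is never larger than the best single input.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance_m2 for m in measurements]
    total = sum(weights)
    estimate = sum(w * m.value_m for w, m in zip(weights, measurements)) / total
    return estimate, 1.0 / total


if __name__ == "__main__":
    readings = [
        Measurement("radar", 42.3, 0.25),
        Measurement("lidar", 42.05, 0.01),
        Measurement("camera", 43.0, 1.0),
    ]
    est, var = fuse(readings)
    print(f"fused range: {est:.2f} m, std dev: {var ** 0.5:.3f} m")
```

In this toy case the precise LiDAR reading dominates the result, while the radar and camera still tighten the uncertainty slightly; a real fusion stack must also associate detections across sensors and handle correlated or biased errors.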

Automotive engineers can address these challenges using a centralized or a distributed approach to the underlying ADAS or autonomous driving processing architecture. Centralized architectures can potentially keep component count low, help reduce processing latency, and may also simplify software management. However, they are challenged by the high bandwidth (i.e., multiple gigabits per second) required to transport raw sensor data from across the vehicle to the central processing element, and by the correspondingly high centralized compute performance needed to process this data. As computing requirements increase, it will become more difficult to stay within the power consumption budget (especially for EVs) without elaborate cooling solutions, and to meet the target physical form factor.

This is why there is a parallel trend toward distributed computing architectures in vehicles. Here, some degree of local pre-processing is integrated in, or close to, the edge sensors before information is sent to an ADAS domain controller or zonal processor for further aggregation, processing, or fusion with other sensor data. This distributed approach greatly reduces the computing requirements on the central processor and the need for multi-gigabit data links across the vehicle, while also easing thermal and form-factor constraints. However, the software management of distributed nodes and the sense-think-act latency must be carefully considered.
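The bandwidth argument for distributed processing can be illustrated with back-of-the-envelope arithmetic. The sketch below compares the link load of streaming raw camera pixels to a central processor with that of sending only object lists from edge nodes. The sensor set, resolutions, frame rate, bit depth, and object-list size are all hypothetical assumptions; real systems often transmit compressed or partially processed data that falls between these two extremes.

```python
# Back-of-the-envelope comparison of link load for centralized vs distributed
# processing. Every sensor count, resolution, and object-list size below is a
# hypothetical assumption chosen for illustration, not data from the article.
from dataclasses import dataclass


@dataclass
class Camera:
    megapixels: float
    fps: float
    bits_per_pixel: int


def raw_rate_gbps(cam: Camera) -> float:
    """Uncompressed pixel stream: pixels/frame * frames/s * bits/pixel, in Gbit/s."""
    return cam.megapixels * 1e6 * cam.fps * cam.bits_per_pixel / 1e9


def object_list_rate_gbps(objects_per_frame: int, bytes_per_object: int, fps: float) -> float:
    """Rate (Gbit/s) if the edge node sends only a list of detected objects."""
    return objects_per_frame * bytes_per_object * 8 * fps / 1e9


if __name__ == "__main__":
    # Hypothetical sensor set: four 8 MP and four 3 MP cameras, 12-bit raw, 30 fps.
    cameras = [Camera(8.0, 30.0, 12)] * 4 + [Camera(3.0, 30.0, 12)] * 4
    centralized = sum(raw_rate_gbps(c) for c in cameras)
    # Assume each camera node reports up to 64 objects of 64 bytes each per frame.
    distributed = sum(object_list_rate_gbps(64, 64, c.fps) for c in cameras)
    print(f"centralized (raw pixels): {centralized:.1f} Gbit/s")
    print(f"distributed (object lists): {distributed * 1000:.2f} Mbit/s")
```

With these assumed numbers, raw streaming needs roughly 16 Gbit/s across the vehicle, while object lists need under 10 Mbit/s. The trade-off is that any information discarded at the edge is no longer available to the central fusion stage.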

So which architecture will ‘win’? Of course, the answer is “it depends”! Each approach has pros and cons, and each OEM or Tier 1 needs to weigh multiple complex factors, including platform scalability across its vehicle portfolio. indie’s view is that distributed intelligence has an important role to play in evolving vehicle architectures and is not mutually exclusive with centralized approaches. Indeed, we are observing OEMs deploy a blend of distributed/zonal and centralized computing across the vehicle’s E/E architecture as a ‘best of both worlds’ approach to meet their needs.

Broad Portfolio and a Focused Vision for Sensor Solutions

indie Semiconductor is at the forefront of this edge sensing revolution, offering solutions for multiple sensor modalities. Our current sensing products include the Sonosense™ and Echosense™ ultrasonic ICs, which feature built-in digital signal processing and are being deployed in park-assist systems. Surya™, our latest highly integrated FMCW-based LiDAR SoC, brings unparalleled technical and cost benefits to the broader deployment of LiDAR systems. Our LiDAR solutions are complemented by technology from TeraXion, a market leader in designing and manufacturing innovative photonic components, which indie acquired last year and which is now an indie Semiconductor company.

Through the recent acquisition of Symeo, Analog Devices’ radar division, and the establishment of our Vision business unit, indie is committed to developing highly innovative solutions that further expand our sensor portfolio for radar and vision processing applications. As validation of this progress, indie recently noted that it has secured a top-four Tier 1 radar customer.

By making it easier for automotive manufacturers to integrate multiple sensor modalities and efficiently process data from a growing number of sensors, indie Semiconductor is playing a key role in meeting vehicle ADAS and autonomy requirements, both now and in the future. The combination of robust, reliable, and cost-effective sensing technologies, architectures that support sensor fusion, and legal frameworks that keep pace with technological innovation provides the basis for industry progress toward higher levels of driving automation and the ultimate goal of the uncrashable car.

Of course, safety and driving automation aren’t the only areas of automotive design that are evolving rapidly. User experience, from ambiance and lighting to the ability to work on the move, is also high on the agenda, as we will see in our next blog.